A collaborative moving image production made especially for ‘In the Dark’. The video made two journeys to each artist.

Participating London Group artists: Bryan Benge, Sandra Crisp, Stephen Carley and Genetic Moo (2019).

This film was exhibited in The London Group and Friends show ‘In the Dark’, an experimental and collaborative group show with the Computer Art Society and Lumen Prize, held at The Cello Factory, London, 17–19 January 2019.

It was really great to take part in this collaborative process: like clicking the refresh button on an often solitary practice of making videos. I decided in advance to rediscover abandoned or incomplete video clip fragments on my hard drive and contribute these to the piece.

However, in response to clips added by the other artists, I also added bits of recent video work in progress. The footage I added was mainly dynamic 3D imagery textured with online video clips, plus a Street View screencast that uses Google’s history function to look back at particular locations in time: in this case the Heygate Estate, Elephant & Castle, London, going back to 2012, when I last visited and before it was finally demolished. The Street View clip is overlaid with a rotating geodesic polyhedron, slowly cycling as if capturing data as it goes.

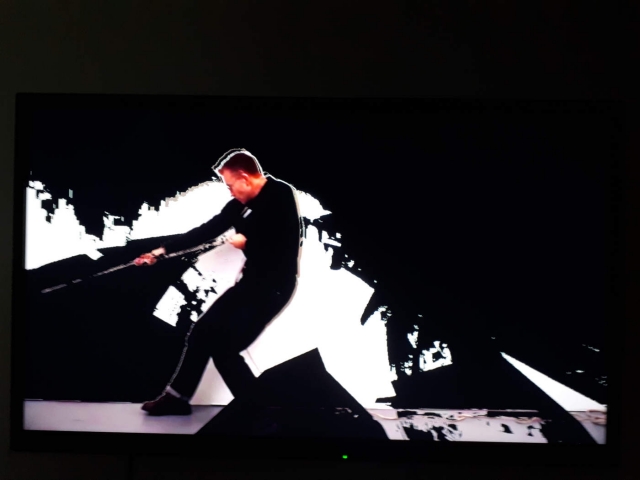

Some favourite collisions between my work and the other artists’ work in the video include the appearance of Bryan’s fantastical figures, and Stephen’s urban people and his rope performance weaving through my 3D scenes of animated textured cubes. Together they created totally surreal scenes that I could never have imagined: humans moving through a blackened, dystopic, glitched landscape. In some parts it is sublime and slightly terrifying; in others it flickers through the ink towards the light and out the other side.

Eventually the sequence of contrasting imagery from all the artists emerged as an interesting, if slightly discombobulated, structure near the end of the Exquisite Corpse process, and then Tim created a really great threshold effect to bring the whole thing together…

Here’s an explanation of the editing process.

The film, as everyone had edited it, was 4 minutes long.

I wrote a program to turn the film into JPEGs (2400 of them) at 10 fps.
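The original program isn't shown, so what follows is only a minimal sketch of how this step could be done, assuming the widely used `ffmpeg` command-line tool is installed. The helper names and the `frame_%05d.jpg` file pattern are hypothetical. The frame count checks out: 4 minutes is 240 seconds, and 240 × 10 fps gives 2400 stills.

```python
import subprocess
from pathlib import Path


def extract_frames_cmd(video: str, out_dir: str, fps: int = 10) -> list[str]:
    """Build an ffmpeg command that samples `video` at `fps` frames per second
    and writes numbered JPEGs into `out_dir` (hypothetical helper)."""
    pattern = str(Path(out_dir) / "frame_%05d.jpg")
    # -vf fps=N resamples the video to N frames per second before writing stills.
    return ["ffmpeg", "-i", video, "-vf", f"fps={fps}", pattern]


def expected_frame_count(duration_s: float, fps: int = 10) -> int:
    """Number of stills produced for a clip of the given length in seconds."""
    return round(duration_s * fps)


def extract_frames(video: str, out_dir: str, fps: int = 10) -> None:
    """Run the extraction; requires ffmpeg to be on the PATH."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(extract_frames_cmd(video, out_dir, fps), check=True)


if __name__ == "__main__":
    # A 4-minute film sampled at 10 fps gives 2400 stills.
    print(expected_frame_count(4 * 60))  # 2400
```

Calling `extract_frames("film.mp4", "frames")` would fill a `frames` folder with numbered JPEGs, ready for the playhead step.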

Then I wrote another program to create 2 playheads, 500 frames apart. These playheads go through the folder of JPEGs and pick up 2 images at a time. One image is then passed through the other using a threshold: anything above the threshold lets the other image through, and anything below is made black. I wanted to keep the film quite dark. The playheads go through all 2400 frames twice, so you end up with 4800 new frames, which are put back together into an 8-minute film.

After doing this I realised I could use the same technique in a generative way, by changing the distance between the playheads on each pass, making a film that varies over many hours when run from a computer. We’ll be using this technique for some future Genetic Moo films, so the process has been very useful for us: it has thrown up new techniques and ideas.
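Here is a rough sketch of the two-playhead threshold idea, written only to illustrate the description above, not Tim's actual code. Several details are assumptions: playhead B wraps round to the start of the folder when it runs off the end; the brightness of playhead A's frame acts as the mask and B's frame is what shows through; brightness is measured with Rec. 601 luma weights; and the default threshold of 128 is arbitrary. To stay standard-library only, frames are flat lists of RGB tuples, and loading and saving the JPEGs (for example with Pillow) is left out.

```python
from typing import Iterator, Sequence

Pixel = tuple[int, int, int]  # (R, G, B), each 0-255
Frame = list[Pixel]           # one frame as a flat list of pixels


def luminance(p: Pixel) -> float:
    """Perceived brightness using the Rec. 601 luma weights."""
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b


def threshold_composite(mask: Frame, source: Frame, threshold: float = 128) -> Frame:
    """Where the mask frame is brighter than `threshold`, let the source
    frame show through; everywhere else, output black."""
    return [s if luminance(m) > threshold else (0, 0, 0)
            for m, s in zip(mask, source)]


def playhead_pairs(n_frames: int, gaps: Sequence[int]) -> Iterator[tuple[int, int]]:
    """Yield (index_a, index_b) for two playheads, one full pass through the
    frames per entry in `gaps`. Playhead B runs `gap` frames ahead of A and
    (by assumption) wraps round to the first frame when it reaches the end."""
    for gap in gaps:
        for a in range(n_frames):
            yield a, (a + gap) % n_frames


def render(frames: Sequence[Frame], gaps: Sequence[int],
           threshold: float = 128) -> Iterator[Frame]:
    """Produce one composited output frame per playhead step."""
    for a, b in playhead_pairs(len(frames), gaps):
        yield threshold_composite(frames[a], frames[b], threshold)
```

With 2400 frames and `gaps=[500, 500]`, `render` yields 4800 frames, which at 10 fps is 480 seconds, or 8 minutes. For the generative version, a different gap on each pass, such as `[random.randrange(1, 2400) for _ in range(100)]`, gives 240,000 frames, roughly 6.7 hours at 10 fps. Using a generator keeps only one output frame in memory at a time, which matters at that length.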

We loved the mix of aesthetics involved; that really made the end result intriguing. Stephen’s pulling clip, which repeats a lot because Stephen had put it in 4 times (doubled to 8), made it feel like a struggle against some impending blackness. In fact, with Sandra’s data surveillance, Tim’s spidery algorithms and the mix of weird sexual and military images, it definitely had an internet-dystopian feel to it, which makes sense if you think about it. I might expand these thoughts into an essay for the newsletter next time.

Tim